Human Instrumentation and Robotics (HIR) Lab

The mission of our lab is to quantify, understand, and distinguish human movement via wearable technologies to better interpret performance, health, and behavior, with applications in human-robot interaction. This research is necessarily interdisciplinary and draws from engineering dynamics, signal processing, robotics, biomechanics, controls, and human factors, to name a few. One of the many challenges associated with this type of work is that no single, comprehensive definition or metric of performance exists; in fact, most applications require a task-specific definition of performance that has both physical and operational meaning to the stakeholders involved. Below are examples of projects our lab will be pursuing in the near future, including a continuation of my postdoctoral research, which has a corresponding website and paper linked below where you can learn more.

Stewart Platform Digital Twin

Collaborators: Casey Harwood, Austin Krebill

Designing submersible underwater vehicles and characterizing their associated hydrodynamic forces remains an open research challenge. With the aid of a six-degree-of-freedom parallel manipulator known as a Stewart platform, or hexapod, parameters like added mass can be strategically and accurately estimated. The digital twin for the platform, which simulates the kinematics and dynamics of the machine, ensures that its motions stay within the physical limitations of its design. This project also aims to design, model, and control a miniature version of the hexapod for education and research purposes.
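As a rough illustration of the kind of kinematic check a digital twin can perform, the sketch below computes the six leg lengths of a Stewart platform for a commanded pose (inverse kinematics) and tests them against actuator stroke limits. It is a minimal sketch, not the lab's model: the anchor layout, dimensions, stroke limits, and the function names `hexagon_anchors`, `leg_lengths`, and `within_limits` are all assumptions made for illustration.

```python
import math


def rotation_matrix(roll, pitch, yaw):
    """Rotation R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def hexagon_anchors(radius, offset_deg):
    """Six anchor points on a circle, paired around 0, 120, and 240 degrees.

    This layout is hypothetical; a real platform has its own geometry.
    """
    points = []
    for k in range(3):
        for sign in (-1, 1):
            angle = math.radians(120 * k + sign * offset_deg)
            points.append((radius * math.cos(angle), radius * math.sin(angle), 0.0))
    return points


def leg_lengths(base, platform, translation, rpy):
    """Leg i has length |t + R * b_i - a_i| (a_i: base anchor, b_i: platform anchor)."""
    R = rotation_matrix(*rpy)
    lengths = []
    for a, b in zip(base, platform):
        # Platform anchor expressed in the base frame.
        p = [translation[i] + sum(R[i][j] * b[j] for j in range(3)) for i in range(3)]
        lengths.append(math.dist(p, a))
    return lengths


def within_limits(lengths, min_len, max_len):
    """True if every leg length lies inside the actuator stroke range."""
    return all(min_len <= length <= max_len for length in lengths)


# Example with made-up dimensions (meters) and a small tilted pose.
base = hexagon_anchors(radius=0.50, offset_deg=10)
platform = hexagon_anchors(radius=0.30, offset_deg=10)
pose_lengths = leg_lengths(base, platform, (0.0, 0.0, 0.40), (0.05, 0.0, 0.1))
print(within_limits(pose_lengths, min_len=0.35, max_len=0.60))
```

A pose whose leg lengths fall outside the stroke range would be rejected before it is ever sent to the hardware. A fuller model would also check joint angles, leg collisions, and actuator velocity and force limits.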

QR Code

Use a camera to interact with a virtual and/or augmented reality model of the hexapod.

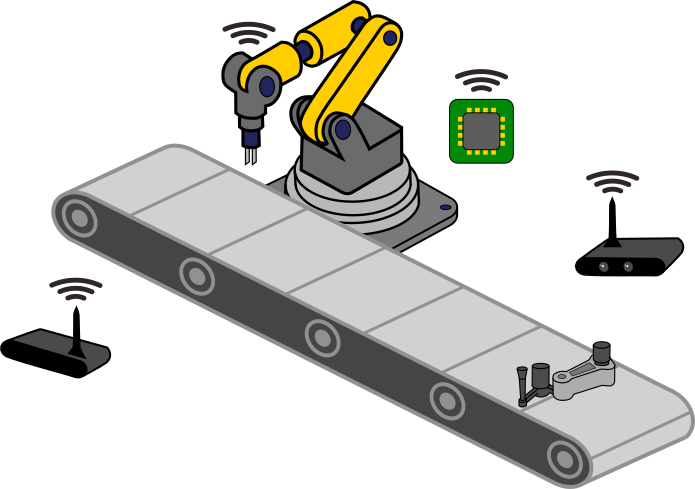

Synthetic Data Generation for Deep Learning Training

One of the most challenging aspects of any deep learning application is gathering and preparing the datasets required to train complex models effectively. The question of “how much data is enough?” remains open, though there are some commonly cited rules of thumb for the minimum size of the training dataset, such as 10 times the number of features, 100 times the number of classes, or 10 times the number of trainable parameters. While these rules are useful guidelines, the quality of the dataset is also an important factor. This project investigates the feasibility and performance of training these models on synthetic images and/or augmented CAD model images in the context of advancing automated manufacturing.
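To make the rules of thumb above concrete, the short sketch below evaluates each one for a given model. The function name `min_training_set_sizes` and the example numbers are hypothetical; the multipliers are the ones quoted in the text, and they are guidelines rather than guarantees.

```python
def min_training_set_sizes(n_features, n_classes, n_parameters):
    """Evaluate three commonly cited rules of thumb for minimum dataset size."""
    return {
        "10x features": 10 * n_features,
        "100x classes": 100 * n_classes,
        "10x trainable parameters": 10 * n_parameters,
    }


# Hypothetical example: 64x64 grayscale images, 5 part classes, a 250,000-parameter model.
estimates = min_training_set_sizes(n_features=64 * 64, n_classes=5, n_parameters=250_000)
for rule, size in estimates.items():
    print(f"{rule}: at least {size} samples")
```

The three rules can disagree by orders of magnitude for the same model, which is one reason the question remains open and why dataset quality matters alongside size.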

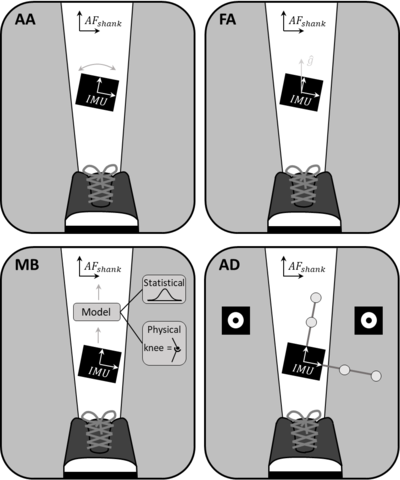

Determining Anatomical Frames of Reference for Inertial Motion Capture

Despite the exponential growth in the use of inertial measurement units (IMUs) for biomechanical studies, future growth in inertial motion capture is stymied by a fundamental challenge: how to estimate the orientation of the underlying bony anatomy using skin-mounted IMUs. This challenge is of paramount importance because the orientation of the bony anatomy is needed to estimate joint angles. Unfortunately, no convention yet exists for defining these anatomical frames for inertial motion capture, and the four current approaches in the literature produce estimates of these frames that differ so significantly that it is difficult, if not impossible, to compare results across studies.

Consequently, a significant need remains for creating, validating, and adopting a standard for defining anatomical axes via inertial motion capture to fully realize this technology’s potential for biomechanical studies. This project will focus on how to accomplish this in a way that is repeatable and reproducible across testing sessions, subject populations, and technologies. The approach will focus on simulating IMU data from optical motion capture data and on developing kinematic biomechanical constraints suited to IMU data in order to detect these reference frames automatically.
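One simple way to picture simulating IMU data from optical motion capture is sketched below: the world-frame position of a sensor is differentiated twice, gravity is subtracted, and the result is rotated into the sensor frame to give a synthetic accelerometer reading. This is an illustrative sketch under stated assumptions, not the project's method: the function name `simulate_accelerometer`, the z-up world frame, and the use of a central finite difference are all choices made here, and sensor noise, bias, and gyroscope simulation are not modeled.

```python
GRAVITY = (0.0, 0.0, -9.81)  # world-frame gravity in m/s^2 (z-up is an assumption)


def simulate_accelerometer(positions, rotations, fs):
    """Synthetic accelerometer readings (specific force) in the sensor frame.

    positions: list of (x, y, z) sensor positions in the world frame, in meters
    rotations: list of 3x3 sensor-to-world rotation matrices, one per sample
    fs:        sampling rate in Hz
    Returns one reading per interior sample (the first and last are dropped).
    """
    dt = 1.0 / fs
    readings = []
    for k in range(1, len(positions) - 1):
        # Central second difference approximates world-frame acceleration.
        acc = [
            (positions[k + 1][i] - 2.0 * positions[k][i] + positions[k - 1][i]) / dt**2
            for i in range(3)
        ]
        # An accelerometer senses acceleration minus gravity.
        force_world = [acc[i] - GRAVITY[i] for i in range(3)]
        # Rotate into the sensor frame with the transpose of sensor-to-world R.
        R = rotations[k]
        readings.append([sum(R[j][i] * force_world[j] for j in range(3)) for i in range(3)])
    return readings


# A stationary, level sensor should read roughly +9.81 m/s^2 along its z axis.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
still = simulate_accelerometer([(0.0, 0.0, 1.0)] * 5, [identity] * 5, fs=100.0)
print(still[0])
```

In practice, marker trajectories are usually low-pass filtered before differentiation, because finite differences amplify measurement noise.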